Vincent Sanders: Raspberries are not the only fruit

I have worked with ARM-based systems for longer than I care to admit to myself. From the Acorn Archimedes 305 in 1987 through to modern 64-bit systems, I have seen many, many changes in the ARM community. One big change has been the rise of the inexpensive single board computer (SBC).

Arguably the Raspberry Pi (RPi) was responsible for starting this trend. Before the RPi there were small development boards available (I was even involved in producing some of them), but none of these really became a big thing; they were principally vehicles for silicon vendors to showcase their SoC in an accessible way. When I say accessible, I mean that silicon vendors who were previously used to charging many thousands of pounds for their development boards were now only charging hundreds.

In my opinion the RPi was a complete disruption to the SBC market. In early 2012 a complete ARM computer system could now be purchased for $35 (£25), which was substantially cheaper than the best contemporary competitor, the BeagleBone, at $89 (£60).

To be clear, the reason the RPi succeeded (millions sold, household name) was not price alone but also the large amount of good supporting software and how easy it was made for teachers and makers to use.

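There were several areas where the RPi managed to turn perceived issues into opportunities, either by using what was already available or by leaving third parties to provide it. The issues raised about the original RPi included:

- no case

- no integrated storage

- not having an x86 processor

- being a slow processor

- limited peripheral support

- no real time clock

- not supplying keyboards, mice

- not providing displays.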

With that acceptance there have been many, many new SBCs coming to market with better peripherals and increasingly competitive pricing. These are technically not clones, as none of them use the Broadcom processor of the RPi, but they often share many features and possibly even a compatible expansion header.

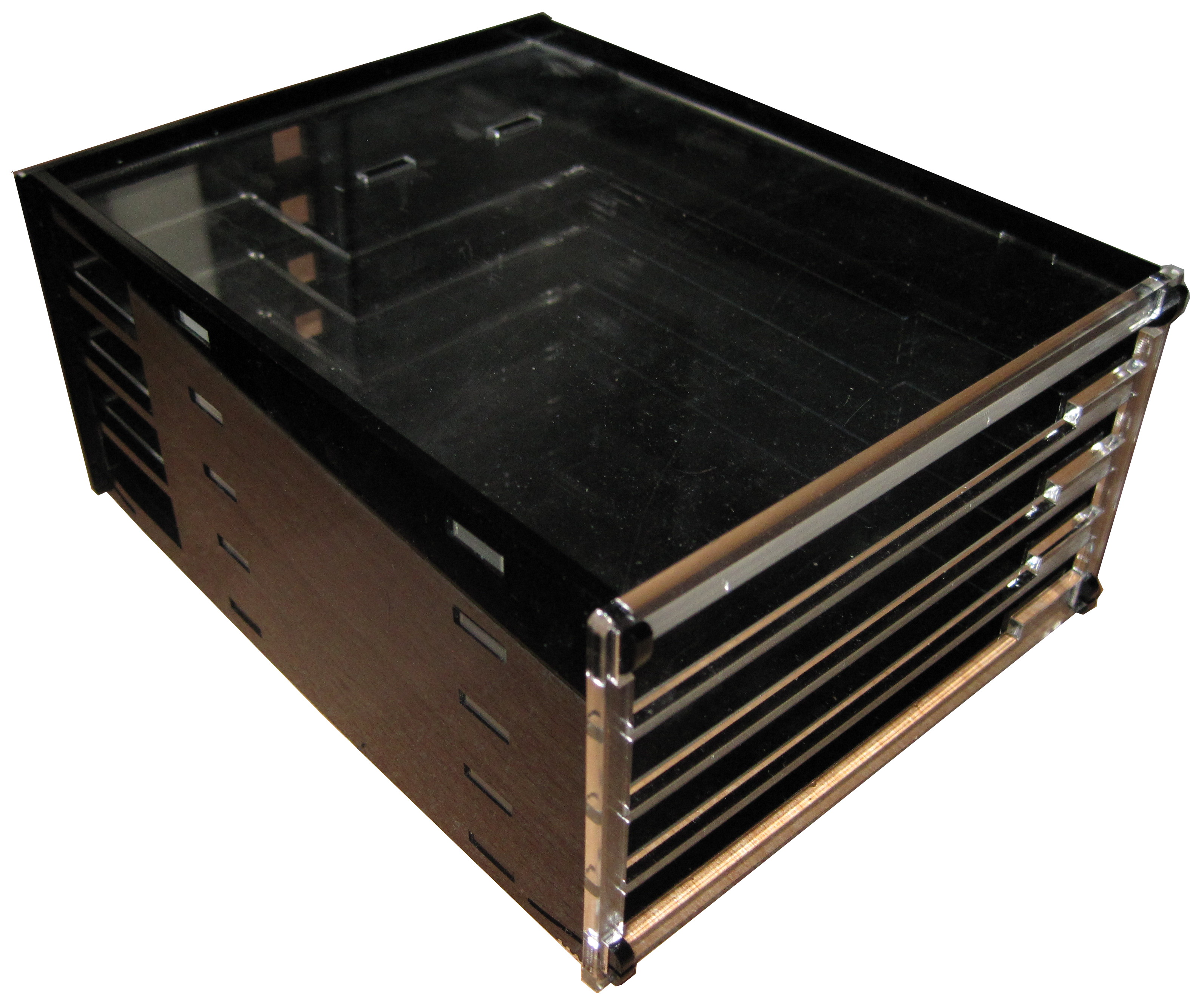

The Banana Pi was one of these copycats, which I acquired for a similar price to an RPi in 2014. The main processor of this system was a 1GHz dual core Allwinner A20 (a considerable advance on the 700MHz single core of the original RPi) coupled to a gigabyte of memory. Additionally the board benefited from having SATA and a gigabit Ethernet MAC, which made for a much more versatile system. Various third parties filled in the missing peripherals, including my own attempt at a case.

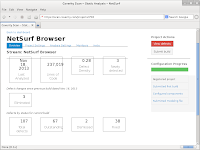

I acquired a Cubietruck for the NetSurf project to use as a build node in their CI system. This is again based on the A20 but with more memory and somewhat better peripheral support, though at a substantial cost over the Banana Pi.

The most recent addition to this form factor is the introduction by Xunlong Software of the Orange Pi PC. This little board has the same footprint as the RPi2 B+ design but with differing connector placement. The processor is a quad core 1.6GHz Allwinner H3 with a gigabyte of memory, and the board has Ethernet and USB but no SATA.

The big news about this board, though, is the price: at $15 (£10) it resets the price expectations just as the RPi did before it. I was initially sceptical of the quality of the product (or if it would arrive at all) but I have acquired five of these boards and every one of them came well packaged, boxed and in a static bag, just like the RPi does, and they all worked.

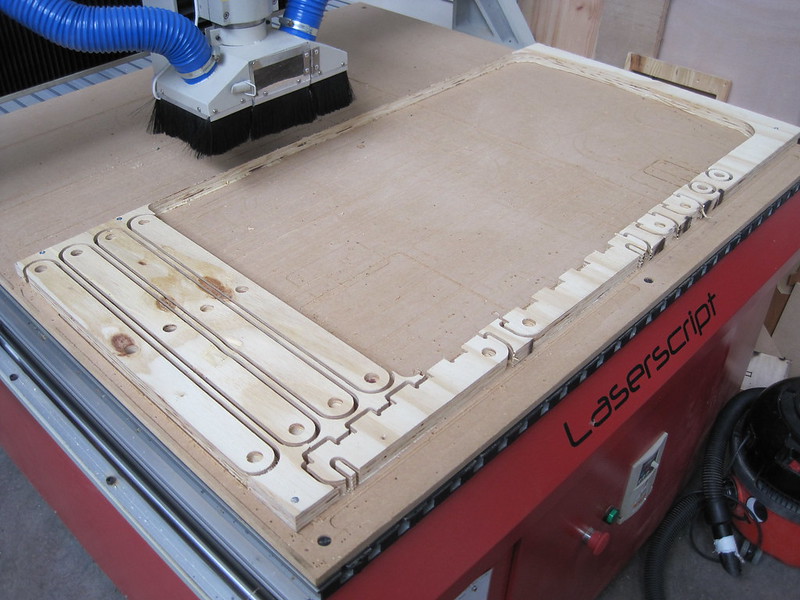

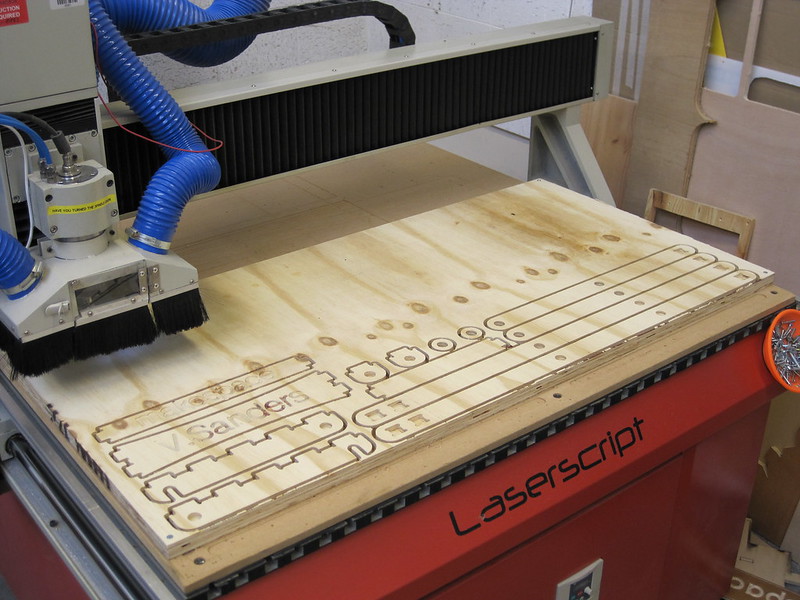

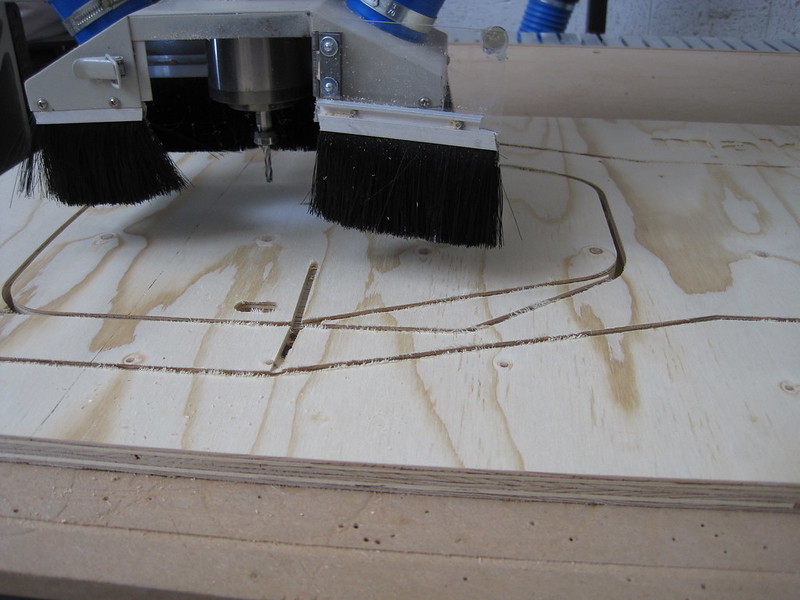

I created a case design based on my RPi slimline case so they would be protected when piled up with all the other boards. The use of a DC barrel jack instead of micro USB for power is better in that the connector is more robust and intended for higher current draws, but it does mean additional leads are needed. There are, however, two flies in the ointment; neither is a showstopper but both make the board a little more difficult to use.

One is the simple necessity of a substantial heatsink on the H3 processor. Initially I used a small 20 x 20mm copper heatsink (around 800 square mm surface area) but this was insufficient under full load. I did not want to have to use a fan, so I milled some slots into copper round bar, then cut off sections and faced them on a lathe. The completed design had more than 2200 square mm surface area and cost around $2.50 (£1.50) in material (and a couple of hours in the workshop, but that was fun and I made something).
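
To give a feel for where a figure like 800 square mm comes from, here is a rough sketch of how the exposed area of a simple straight-fin heatsink adds up. The fin count, height and thickness are hypothetical values picked only so that a 20 x 20mm base lands near the quoted figure; they are not measurements of the heatsinks described above, and the function ignores the edges of the base plate.

```python
def finned_area_mm2(width, depth, fin_count, fin_height, fin_thickness):
    """Rough exposed area (square mm) of a straight-fin heatsink.

    The face stuck to the chip and the edges of the base are ignored.
    All dimensions are in millimetres.
    """
    # Fin tips plus the base between the fins equal the projected footprint.
    top = width * depth
    # Each fin has two long sides running the full depth of the base.
    fin_sides = 2 * fin_count * fin_height * depth
    # Each fin has two small end faces.
    fin_ends = 2 * fin_count * fin_height * fin_thickness
    return top + fin_sides + fin_ends


# Hypothetical geometry: 20 x 20mm base, five fins 2mm tall and 1mm thick.
small = finned_area_mm2(20, 20, fin_count=5, fin_height=2, fin_thickness=1)

# Ratio of the two surface areas quoted in the text (2200 vs 800 square mm).
quoted_ratio = 2200 / 800

print(f"small heatsink: about {small} square mm")
print(f"replacement has roughly {quoted_ratio:.2f}x the area")
```

Under these assumed dimensions the fins double the area of the bare 20 x 20mm footprint, which is why a taller, deeper-slotted design gains so much.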

The second issue, and arguably a much more serious one, is that of software. Let me be honest: it is dreadful, I mean very bad indeed. The images provided from the Orange Pi website are some of the worst examples of "do something quick" I have experienced.

Fortunately a user on the forums named loboris decided to create scripts that generate Debian (and Ubuntu and Fedora) distribution images that can be installed from SD card. He relies on a somewhat patched 3.4 kernel full of Allwinner vendor changes and the inevitable binary blobs for the Mali GPU, but the result does work.

I have had a few units acting as distcc compiler slaves for two weeks now at 100% CPU loading and they are still running. The processor does not get overly warm with the heatsink installed and Debian behaves just fine. The main caveat is that it is definitely not going to work if you try to update the kernel through packaging.
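
If you want to keep an eye on how warm the processor gets under this sort of load, something like the following minimal sketch may help. It assumes the kernel exposes the SoC temperature through the standard Linux thermal sysfs file shown in `THERMAL_PATH`; on the patched 3.4 vendor kernel the path, and whether the value is in degrees or millidegrees, may well differ, so treat both as assumptions to check on your own board.

```python
import time
from pathlib import Path

# Assumption: standard Linux thermal sysfs location; the vendor kernel
# may expose the sensor somewhere else.
THERMAL_PATH = Path("/sys/class/thermal/thermal_zone0/temp")


def parse_temperature(raw: str) -> float:
    """Convert a raw sysfs reading to degrees Celsius.

    Mainline kernels report millidegrees (e.g. "54321"). Some kernels
    may report whole degrees (e.g. "54"), so readings of 1000 or more
    are assumed to be millidegrees.
    """
    value = int(raw.strip())
    return value / 1000.0 if abs(value) >= 1000 else float(value)


def read_temperature(path: Path = THERMAL_PATH) -> float:
    """Read and convert the current temperature from sysfs."""
    return parse_temperature(path.read_text())


def monitor(interval_s: float = 5.0, samples: int = 12) -> None:
    """Print the temperature every interval_s seconds, samples times."""
    for _ in range(samples):
        print(f"{read_temperature():.1f} C")
        time.sleep(interval_s)
```

Calling `monitor()` on the board while a build is running prints one reading every five seconds for a minute, which is enough to see whether the heatsink is coping.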

The state of software support, and Xunlong Software relying on a forum user to complete their product, does tarnish an otherwise impressive and possibly market-changing SBC.

Perhaps I expect too much for fifteen bucks? I guess when the cost of the case, heatsink, cables and memory storage card is similar to the rest of the computer there is simply no margin left for anything else.

In conclusion, I hope this brief overview has provided some insight into what is available in this market and shown that the Raspberry Pi is indeed not the only option.

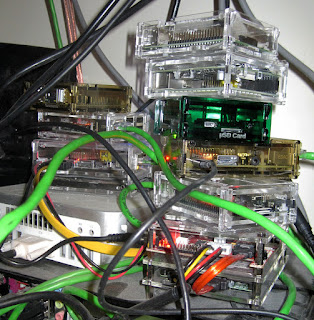

I finish with an image of my ARM build farm, consisting of every SBC I mention here (including a couple of RPi).

The annual Debian developer meeting took place in Portland, Oregon, 23 to 31 August 2014.

Unfortunately I was not able to attend DebConf this year but thanks to the awesome video team all the talks

![Isaac Newton by Sir Godfrey Kneller [Public domain], via Wikimedia Commons](http://upload.wikimedia.org/wikipedia/commons/3/39/GodfreyKneller-IsaacNewton-1689.jpg)